Biography

I am an associate professor (Maître de conférences) at the Université Aix-Marseille (Marseille, FR). My research focuses on high-dimensional statistics and dependence structure estimation, with applications in various fields. Previously, I held postdoctoral positions at York University (Toronto, CA) and at UCLouvain (Louvain-la-Neuve, BE), and before that I completed my PhD at Université Lyon 1 (Lyon, FR) under a CIFRE grant with SCOR SE. A long time ago, I graduated in actuarial sciences at ISFA (Lyon, FR).

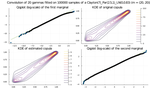

A fully qualified actuary, I have a taste for numerical code and open-source software, and all my work is freely available online. For examples in various formats, you may take a look at this paper about high-dimensional Thorin measures, this Julia package about copulas, this blog post about LaTeX automation, and this deck of slides about version control for academics. Other interesting papers, slides, packages and more are available below.

Interests

- High-dimensional statistics

- Dependence modeling

- Code

- Actuarial Sciences

Education

- PhD: Dependence structure and risk aggregation in high dimensions, 2019-2022

  ICJ & SCOR

- Bachelor, Master and Diploma in Actuarial Sciences, 2015-2018

  ISFA

- Bachelor in Mathematics, 2012-2015

  University of Strasbourg